Training for the job title's beast

Hi, my name is Lina 👋

I am a first-year master's student in Computer Science, Decision Making, and Data at Paris-Dauphine University. I joined Louis for a 2-month internship in data and machine learning.

Thanks to AI (in fact, machine learning), we help companies make sense of their data in a very concrete way. Instead of an all-in-one, complex data platform, we're building APIs that small teams can integrate into their automation processes to build their own solutions. For example, we can help companies identify, filter, and take charge of their inbound leads so they can focus on converting the most interesting people and leave the rest to automation.

Having good quality data is important as we need to understand who we are dealing with to qualify the leads appropriately.

Getting to know the terrain 🗺️

Part of the qualification process is understanding the lead's job - the job title.

First, we need to acquire it. Easy enough: dozens of enrichment tools seem to pop up every week.

But the problem with job titles is that they quickly become unusable when you try to automate work based on them (which is often the case in marketing).

"CEO", "VP of Engineering", and "Director of Human resources" are pretty common titles. A simple text comparison, a VLOOKUP, or, for the most tech-friendly among us, regular expressions would do the trick. But how would you categorize a "Head of decarbonization" or a "Talent acq. manager" (note the abbreviation)?
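To make the limitation concrete, here is a minimal sketch of the rule-based approach. The rule table, the department labels, and the `departmentFromRules` helper are all hypothetical and written for illustration only; they are not the rules we actually used.

```javascript
// Hypothetical rule table: each rule maps a regular expression to a department.
const RULES = [
  { pattern: /\b(ceo|chief executive)\b/i, department: "Executive" },
  { pattern: /\bengineering\b/i, department: "Engineering" },
  { pattern: /\bhuman resources?\b/i, department: "Human Resources" },
];

// Return the department of the first matching rule, or null when nothing matches.
function departmentFromRules(title) {
  const rule = RULES.find(({ pattern }) => pattern.test(title));
  return rule ? rule.department : null;
}

console.log(departmentFromRules("VP of Engineering"));           // "Engineering"
console.log(departmentFromRules("Director of Human resources")); // "Human Resources"
console.log(departmentFromRules("Talent acq. manager"));         // null
console.log(departmentFromRules("Head of decarbonization"));     // null
```

Every title the rules miss needs a new hand-written pattern, which is exactly what does not scale.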

Because there is a potentially infinite number of job titles, I needed to find a better approach.

Getting someone's information is easy. Making sense of it is not

The main goal here was to build a model that categorizes job titles along two properties: department and responsibility.

- Department is a limited list of areas within a company that we could link a title to. LinkedIn offers a good starting point with 146 job departments. We want to be cautious about adding more, as a longer list would become impractical for categorization purposes.

For example: DHR, HR, Talent hunter, talent acq. manager, and People's management would all fit in the "Human Resource" department.

- Responsibility is pretty much self-explanatory and indicates the level the person operates at. Notice we don't use "seniority", as it is biased toward age, which does not always reflect the level of responsibility. For example, you could be a technician who has been in place for a long time, or a young and newly appointed head of brand.

For example:

- Head of Growth -> Top responsibility

- Sales representative intern -> Junior responsibility

- Project manager -> Middle responsibility.

💡 Note: the granularity at which we categorize responsibility and departments may change depending on feedback.

Choosing my weapon 🏹

Categorizing job titles using simple text comparison and regular expressions was out of the question. Could "machine learning" be our savior here?

Maybe, but there was a problem: I had 40 days, and that's not much.

So rather than dreaming of a perfect solution, I needed to have something that worked at least a bit better than the usual text comparison approach.

Instead of reinventing the wheel, I started by looking at existing services that could help with text classification. I found services such as Google Cloud AutoML and Amazon Web Services ML, and tried them to see what results I could get. The learning and prediction parts were satisfying, but an important point was missing: those services couldn’t provide continual/incremental learning (it's an industry challenge).

We wanted our model to learn when a job title was incorrectly classified.

After trying different approaches, I decided to implement the program myself in JavaScript. I found existing libraries that implemented classifiers and tried a few of them. I ended up using one called classificator, which is a Naïve Bayes classifier. I chose it mainly because of one feature that other classifiers didn’t provide: for each prediction, it returns metrics such as the log-likelihood, the log-probability, and the probability for each category. Thus, we could see, for each prediction, the probability of the job title belonging to each department. Having those metrics was necessary to define a confidence threshold for the model.
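To illustrate why per-category probabilities matter, here is a minimal, self-contained sketch of a multinomial Naïve Bayes classifier with Laplace smoothing and a confidence threshold. It is written from scratch for this post and is not the classificator library's code or API; the function names, the training titles, and the `0.6` threshold are assumptions chosen for the example.

```javascript
// Lowercase a title and split it into alphabetic word tokens.
function tokenize(text) {
  return text.toLowerCase().match(/[a-z]+/g) || [];
}

// The whole model is a set of counters (hypothetical structure).
function createClassifier() {
  return { docCount: {}, wordCount: {}, totalWords: {}, vocabulary: new Set(), totalDocs: 0 };
}

// Learning one example only increments counters.
function learn(model, text, category) {
  model.docCount[category] = (model.docCount[category] || 0) + 1;
  model.totalDocs += 1;
  model.wordCount[category] = model.wordCount[category] || {};
  for (const token of tokenize(text)) {
    model.vocabulary.add(token);
    model.wordCount[category][token] = (model.wordCount[category][token] || 0) + 1;
    model.totalWords[category] = (model.totalWords[category] || 0) + 1;
  }
}

// Score every category, normalize the scores into probabilities, and
// return null as the prediction when the best probability is below the threshold.
function categorize(model, text, threshold = 0.6) {
  const tokens = tokenize(text);
  const vocabSize = model.vocabulary.size;
  const scores = Object.keys(model.docCount).map((category) => {
    let logProba = Math.log(model.docCount[category] / model.totalDocs); // prior
    for (const token of tokens) {
      const count = (model.wordCount[category][token] || 0) + 1; // Laplace smoothing
      logProba += Math.log(count / ((model.totalWords[category] || 0) + vocabSize));
    }
    return { category, logProba };
  });
  // Subtract the maximum before exponentiating to avoid numerical underflow.
  const maxLog = Math.max(...scores.map((s) => s.logProba));
  const total = scores.reduce((sum, s) => sum + Math.exp(s.logProba - maxLog), 0);
  const likelihoods = scores
    .map((s) => ({ ...s, proba: Math.exp(s.logProba - maxLog) / total }))
    .sort((a, b) => b.proba - a.proba);
  const best = likelihoods[0];
  return { likelihoods, predictedCategory: best && best.proba >= threshold ? best.category : null };
}

const model = createClassifier();
learn(model, "Talent acquisition manager", "Human Resources");
learn(model, "HR business partner", "Human Resources");
learn(model, "Head of growth marketing", "Marketing");
learn(model, "Content marketing manager", "Marketing");

const confident = categorize(model, "Talent hunter");
const ambiguous = categorize(model, "Manager");
console.log(confident.predictedCategory); // "Human Resources"
console.log(ambiguous.predictedCategory); // null (neither department is likely enough)
```

With the threshold in place, an ambiguous title such as "Manager" is flagged as uncertain instead of being silently assigned to whichever department scores slightly higher.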

The training ⚔️

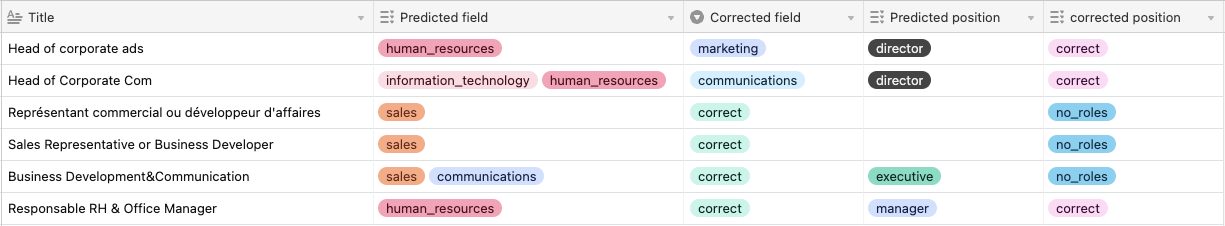

Depending on what the model had learned and its accuracy, the predictions weren’t always correct. This is why I had to find a way to correct the errors.

To set this correction system up, the program was connected to Airtable (an online database) through its API. For each request, a record is created in Airtable with the job title and the predicted department, so every prediction is stored. If a prediction is incorrect, we can flag it by selecting the right department, which triggers a correction request for the model to learn from.

Implementing continual learning was one of the hardest tasks. As mentioned before, online services like Google Cloud AutoML didn’t provide that.

When a misprediction happened (say the model categorized "nurse" in the "marketing" department), those services left us with two options: re-train the whole model (which could take days on 10k+ rows) or batch the training. This was an unacceptable trade-off because if you, as a user, replaced the wrong department (marketing) with the right one (healthcare), you would expect the model to give the right answer on subsequent requests.
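The "nurse" example can be sketched as follows. This is a simplified illustration under stated assumptions, not our production code: the count-based model, the `record` object standing in for a stored prediction row, and the `applyCorrection` helper are all hypothetical. The point it shows is that with a count-based Naïve Bayes model, learning one corrected example is a handful of counter increments, so no full re-training is needed.

```javascript
// Lowercase a title and split it into alphabetic word tokens.
function tokenize(text) {
  return text.toLowerCase().match(/[a-z]+/g) || [];
}

// The model state is just counters, so it can be updated one example at a time.
const model = { docs: {}, words: {}, vocab: new Set() };

function learn(model, title, department) {
  model.docs[department] = (model.docs[department] || 0) + 1;
  model.words[department] = model.words[department] || {};
  for (const token of tokenize(title)) {
    model.vocab.add(token);
    model.words[department][token] = (model.words[department][token] || 0) + 1;
  }
}

// Return the department with the highest Naive Bayes score (Laplace smoothing).
function predict(model, title) {
  const totalDocs = Object.values(model.docs).reduce((a, b) => a + b, 0);
  let best = null;
  for (const department of Object.keys(model.docs)) {
    const counts = model.words[department];
    const totalWords = Object.values(counts).reduce((a, b) => a + b, 0);
    let score = Math.log(model.docs[department] / totalDocs);
    for (const token of tokenize(title)) {
      score += Math.log(((counts[token] || 0) + 1) / (totalWords + model.vocab.size));
    }
    if (best === null || score > best.score) best = { department, score };
  }
  return best ? best.department : null;
}

// Hypothetical correction handler: teach the model the department chosen by the user.
function applyCorrection(model, record, correctDepartment) {
  if (record.predicted === correctDepartment) return record; // nothing to fix
  learn(model, record.title, correctDepartment);
  return { ...record, corrected: correctDepartment };
}

learn(model, "Marketing manager", "Marketing");
learn(model, "Brand marketing lead", "Marketing");
learn(model, "Doctor", "Healthcare");

const before = predict(model, "Nurse"); // "Marketing": unknown word, the larger class wins
const record = { title: "Nurse", predicted: before };
applyCorrection(model, record, "Healthcare");
const after = predict(model, "Nurse"); // "Healthcare": the very next request is right
console.log(before, "->", after);
```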

The hunt 🦁

When the program reached a point where it was able to make good predictions according to what it had learned, it was time to put it to use and make it accessible. We used Tailwind and NextJS to create a landing page where requests could be made by entering a job title and clicking on the button to get the corresponding department and responsibility.

Conclusion

During this mission, I worked with time as the main constraint. I had two months to produce something that was working. Thus, the product might not be perfect in terms of prediction accuracy but it's a starting point that I leave my fellow data folks to improve upon. But more importantly, the system I've built is learning as we use it! 🔥

This mission challenged my expectations of the work of a data engineer. I expected to be given isolated coding tasks or mathematically simple exercises like the ones I was used to. Instead, I faced more concrete applications of what I learned during my studies and got to learn about and use many tools that I didn't know before (Node, Vercel, Tailwind, React, NextJS, Airtable, …). All this helped me take a step back and gain a more global understanding of what designing data systems means.

I'm glad I took part in real product development and can't wait to hear your feedback, so here is the link to the landing page.

Try our job title classifier 👉

I'm happy to get your feedback and comments; feel free to reach me on LinkedIn.